Which statement about machine learning is true? This question often pops up when people are first getting into this fascinating field. Machine learning, in a nutshell, is all about teaching computers to learn from data without explicit programming.

It’s like giving a computer a giant stack of books and asking it to figure out how to write its own story. It’s a powerful tool with a lot of potential, but it’s also important to understand the basics to make sense of all the hype.

Machine learning algorithms are used in a wide range of applications, from recommending movies on Netflix to detecting fraud in financial transactions. But how does it actually work? The core idea is that these algorithms can learn patterns from data and then use those patterns to make predictions or decisions.

For example, a machine learning algorithm could be trained on a dataset of images of cats and dogs to learn the features that distinguish one from the other. Once trained, it could then be used to identify new images as either cats or dogs.

1. Machine Learning Fundamentals

Machine learning is a powerful field of computer science that enables systems to learn from data without explicit programming. It empowers computers to identify patterns, make predictions, and improve performance over time. This learning process is achieved through algorithms that analyze data and adjust their parameters accordingly.

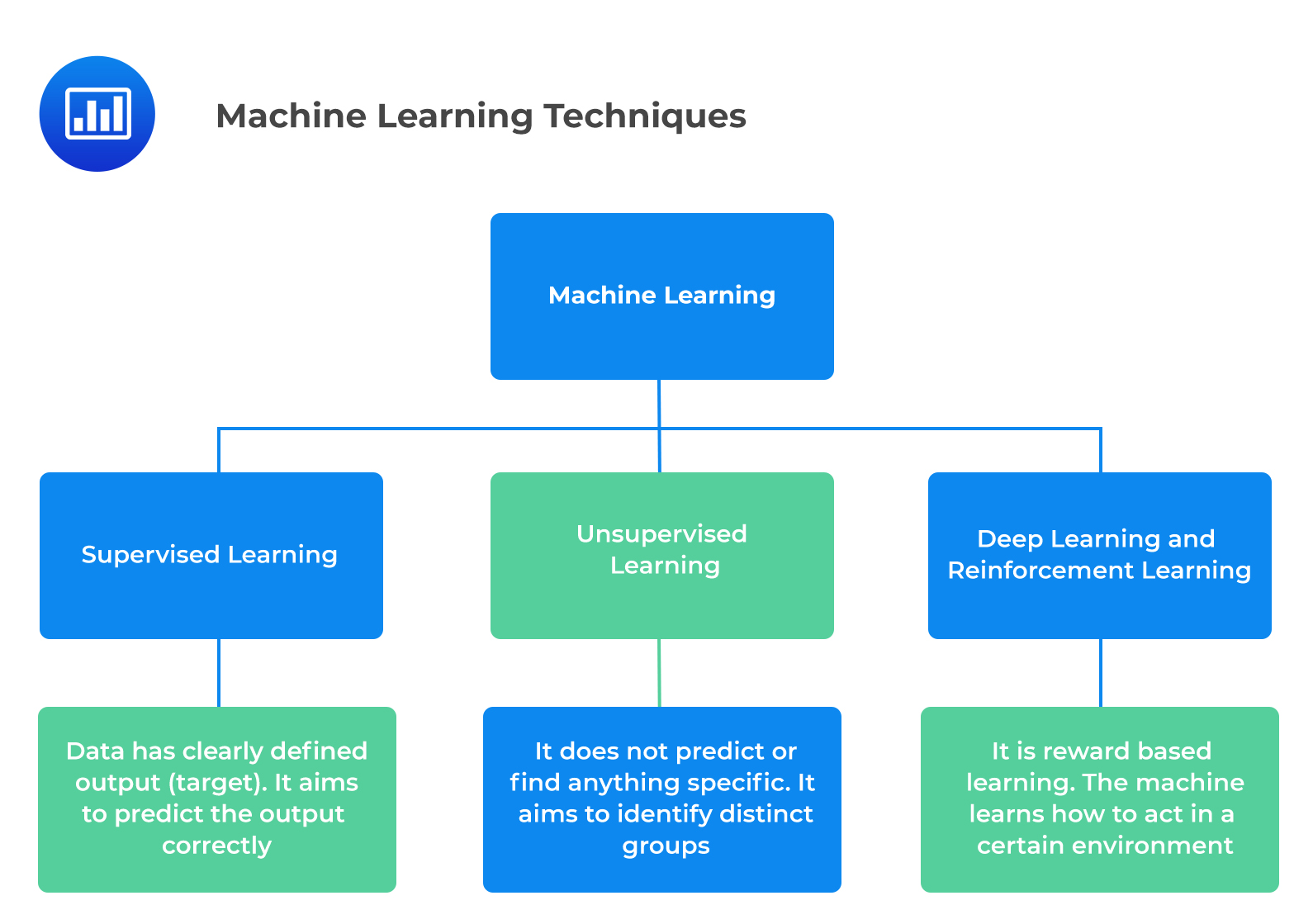

Types of Machine Learning

Machine learning encompasses various approaches, each tailored to specific learning tasks. The three main categories are supervised, unsupervised, and reinforcement learning.

- Supervised learning involves training a model on labeled data, where each input is associated with a known output. This allows the model to learn the relationship between inputs and outputs and make predictions on new, unseen data. For instance, a spam filter can be trained on emails that are each labeled as spam or legitimate.

- Unsupervised learning deals with unlabeled data, where the model seeks to discover patterns and structures within the data without explicit guidance. This is useful for tasks like clustering, anomaly detection, and dimensionality reduction. For example, a customer segmentation algorithm can group customers based on their purchasing behavior without prior knowledge of customer types.

- Reinforcement learning involves training an agent to interact with an environment and learn through trial and error. The agent receives rewards for desired actions and penalties for undesirable ones, optimizing its behavior over time. A classic example is a game-playing AI, where the agent learns to play a game by receiving rewards for winning and penalties for losing.

Real-World Applications

Machine learning has revolutionized various industries, offering solutions to complex problems and enhancing efficiency. Here are some real-world examples:

- Healthcare: Machine learning is used for disease diagnosis, drug discovery, and personalized medicine. For instance, image recognition algorithms can analyze medical images to detect cancer cells, while predictive models can estimate patient outcomes based on their medical history.

- Finance: Machine learning is employed for fraud detection, risk assessment, and algorithmic trading. Fraud detection systems can analyze transactions to identify suspicious activities, while risk assessment models can evaluate loan applications based on various factors.

- E-commerce: Machine learning is used for product recommendations, personalized marketing, and customer service. Recommendation systems suggest products based on user preferences, while chatbots provide automated customer support.

- Transportation: Machine learning is used for autonomous vehicles, traffic management, and route optimization. Self-driving cars use sensors and algorithms to navigate roads, while traffic management systems optimize traffic flow based on real-time data.

Traditional Programming vs. Machine Learning

Traditional programming involves explicitly defining instructions for a computer to follow, while machine learning focuses on enabling computers to learn from data and make decisions based on patterns identified.

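Traditional programming: Explicit, hand-written instructions; the output follows directly from the rules the programmer wrote.

Machine Learning: Data-driven learning; the output is a statistical estimate (often a probability) that can be wrong on individual cases.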

Traditional programming is well-suited for tasks with well-defined rules and predictable outcomes, while machine learning excels in handling complex, ambiguous problems where explicit rules are difficult to define.
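The contrast can be sketched in a few lines of Python. Everything here is a hypothetical toy: one feature (how often the word “free” appears in an email), a hand-picked threshold for the traditional rule, and eight made-up labeled emails for the learned version. The “learning” is only a search for the threshold that makes the fewest mistakes on the labeled examples, a stand-in for what real algorithms do at much larger scale.

```python
# Toy illustration (hypothetical data): decide whether an email is spam
# from one feature, the number of times the word "free" appears in it.


def is_spam_rule(free_count: int) -> bool:
    """Traditional programming: a person writes the rule explicitly."""
    return free_count >= 3  # threshold picked by hand


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Machine learning in miniature: choose the threshold that makes the
    fewest mistakes on labeled (free_count, is_spam) examples."""
    candidates = sorted({count for count, _ in examples})
    best_threshold, best_errors = candidates[0], len(examples) + 1
    for t in candidates:
        errors = sum((count >= t) != label for count, label in examples)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


# Hypothetical labeled emails: (times "free" appears, is it spam?)
training_data = [(0, False), (1, False), (1, False), (2, True),
                 (4, True), (5, True), (0, False), (3, True)]

threshold = learn_threshold(training_data)
print("hand-written rule, 2 occurrences:", is_spam_rule(2))  # False
print("learned threshold:", threshold)                       # 2
```

On this toy data the learned threshold is 2, so the data-driven rule flags an email that the hand-written rule lets through; neither rule is guaranteed to be right on new emails.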

2. Data Requirements and Preparation

Data is the lifeblood of machine learning. Just like a chef needs the right ingredients to create a delicious meal, machine learning models need high-quality data to function effectively. In this section, we’ll delve into the crucial aspects of data requirements and preparation, laying the foundation for successful model training.

Data Quality and Importance

High-quality data is paramount for building accurate and reliable machine learning models. Imagine trying to bake a cake with spoiled ingredients; the outcome would be disastrous. Similarly, feeding a machine learning model with inaccurate or incomplete data will result in a model that produces unreliable predictions.

Data quality significantly impacts model performance in several ways:

- Accuracy: Inaccurate data leads to inaccurate predictions. Imagine a model trained on data with incorrect labels; it will likely misclassify new data.

- Bias: Biased data can lead to biased models, which can perpetuate existing societal inequalities. For example, a model trained on data with gender or racial biases might make unfair decisions.

- Generalizability: Data that doesn’t represent the real-world scenarios the model will encounter will lead to poor generalizability. A model trained on a limited dataset might struggle to make accurate predictions on new, unseen data.

Common data quality issues include:

- Missing values: Gaps in the data can lead to incomplete information and inaccurate predictions.

- Inconsistencies: Data with inconsistent formatting or conflicting information can confuse the model.

- Outliers: Extreme values that deviate significantly from the rest of the data can distort the model’s learning process.
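A quick data-quality report can surface all three issues before any modeling starts. This is a minimal Python sketch over hypothetical records: it counts missing values per column, groups spellings of a category that differ only in case, spaces, or dots, and flags numeric outliers with Tukey’s 1.5 × IQR rule (one common convention, not the only one). It calls `statistics.quantiles` with `method="inclusive"` because, on a sample this small, the default method pulls the upper quartile toward the extreme value and hides it.

```python
import statistics

# Hypothetical customer records; None marks a missing value.
records = [
    {"age": 34, "country": "US", "income": 52000},
    {"age": None, "country": "us", "income": 48000},
    {"age": 29, "country": "U.S.", "income": 51000},
    {"age": 41, "country": "DE", "income": None},
    {"age": 38, "country": "DE", "income": 950000},
    {"age": 45, "country": "de ", "income": 56000},
]


def missing_counts(rows):
    """Count missing (None) values per column."""
    counts = {}
    for row in rows:
        for column, value in row.items():
            counts[column] = counts.get(column, 0) + (value is None)
    return counts


def spelling_variants(rows, column):
    """Group raw values that become identical after trimming, lower-casing,
    and removing dots; groups with several spellings are inconsistencies."""
    groups = {}
    for row in rows:
        raw = row[column]
        if raw is not None:
            key = raw.strip().lower().replace(".", "")
            groups.setdefault(key, set()).add(raw)
    return {key: spellings for key, spellings in groups.items() if len(spellings) > 1}


def iqr_outliers(values):
    """Flag values more than 1.5 * IQR outside the quartiles (Tukey's rule)."""
    data = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in data if v < low or v > high]


print(missing_counts(records))  # {'age': 1, 'country': 0, 'income': 1}
print(spelling_variants(records, "country"))
print(iqr_outliers([r["income"] for r in records]))  # [950000]
```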

Data Preprocessing Techniques

Data preprocessing is a crucial step before model training, ensuring the data is clean, consistent, and suitable for the learning algorithm. Think of it as preparing your ingredients before cooking.

Data Cleaning

Data cleaning involves addressing issues like missing values, inconsistencies, and duplicates to ensure data accuracy and consistency.

- Handling missing values:
  - Imputation: Replacing missing values with estimated values based on other data points. For example, replacing missing age values with the average age of similar individuals.
  - Deletion: Removing data points with missing values. This approach is suitable if only a small share of records is affected or if the dataset is large enough to tolerate the loss.

- Dealing with inconsistencies and errors:
  - Data standardization: Ensuring data consistency by converting values to a uniform format. For example, converting date formats to a standard format like YYYY-MM-DD.
  - Data validation: Checking for inconsistencies and errors using data validation rules. For example, ensuring that age values are within a reasonable range.

- Removing duplicate data: Identifying and removing duplicate data points to avoid bias and redundancy in the dataset.
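Here is one way these cleaning steps could be chained, as a minimal sketch on hypothetical rows. The assumptions are the accepted input date formats listed in `DATE_FORMATS`, the 0 to 120 age range, and imputation with the overall mean age (a simplification of the “similar individuals” approach described above).

```python
import statistics
from datetime import datetime

# Hypothetical raw rows: (name, age, signup date in mixed formats)
raw_rows = [
    ("Ana", 34, "2023-01-15"),
    ("Ben", None, "15/02/2023"),
    ("Cai", 29, "03-20-2023"),
    ("Ana", 34, "2023-01-15"),   # exact duplicate
    ("Dee", 212, "2023-04-02"),  # fails the age range check
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")  # assumed input formats


def to_iso_date(text):
    """Standardize a date string to YYYY-MM-DD, trying each known format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def clean(rows, min_age=0, max_age=120):
    # 1. Remove exact duplicates while keeping the original order.
    unique = list(dict.fromkeys(rows))
    # 2. Validate: drop rows whose age is present but out of range.
    valid = [r for r in unique if r[1] is None or min_age <= r[1] <= max_age]
    # 3. Impute missing ages with the mean of the observed ages.
    mean_age = statistics.mean(r[1] for r in valid if r[1] is not None)
    # 4. Standardize the date format.
    return [(name, age if age is not None else mean_age, to_iso_date(date))
            for name, age, date in valid]


print(clean(raw_rows))
# [('Ana', 34, '2023-01-15'), ('Ben', 31.5, '2023-02-15'), ('Cai', 29, '2023-03-20')]
```

To use deletion instead of imputation, filter out rows whose age is `None` rather than filling them. Deduplication and validation run first so that repeated or invalid rows don’t skew the mean used for imputation.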

Data Normalization

Data normalization (often called feature scaling) rescales feature values to a common scale, such as the range 0 to 1. This helps algorithms that are sensitive to the scale of the data, such as k-nearest neighbors, support vector machines, and models trained with gradient descent.

Benefits of normalization:

- Improved model performance: By bringing all features to a similar scale, normalization prevents features with larger numeric ranges from dominating the learning process.

- Faster convergence: For gradient-based training, normalization can reduce the number of iterations the algorithm needs to converge.

Normalization methods:

- Min-Max Scaling: Rescales values to the range 0 to 1 using the formula

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)}$$

- Standardization (z-score scaling): Transforms data to have a mean of 0 and a standard deviation of 1 using the formula

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the mean and $\sigma$ is the standard deviation of the feature.
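Both formulas translate directly into code. This is a minimal Python sketch using hypothetical income values; `standardize` divides by the population standard deviation, which is one common convention.

```python
import statistics


def min_max_scale(values):
    """Rescale to [0, 1]: (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: avoid dividing by zero
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


def standardize(values):
    """Z-score: (x - mean) / standard deviation (population std here)."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:  # constant feature: avoid dividing by zero
        return [0.0 for _ in values]
    return [(x - mu) / sigma for x in values]


incomes = [20_000, 35_000, 50_000, 95_000]  # hypothetical feature values
print(min_max_scale(incomes))  # [0.0, 0.2, 0.4, 1.0]
print(standardize(incomes))
```

In practice, compute the minimum, maximum, mean, and standard deviation on the training data only and reuse those values for validation and test data, so that no information from held-out data leaks into training.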

Feature Engineering

Feature engineering involves transforming and selecting features to improve model performance. Think of it as finding the best ingredients and preparing them in the most suitable way for your dish.

- Feature selection (removing irrelevant features): Eliminating features that don’t contribute to the model’s predictive power. This reduces complexity and improves model efficiency.

- Feature extraction (creating new features from existing ones): Combining or transforming existing features to create new ones that capture more information or patterns. For example, creating a new feature called “age_group” by grouping age values into categories.

- Feature transformation (converting categorical features into numerical ones): Converting categorical features, like “color” or “gender,” into numerical values that the model can understand. This can be done using techniques like one-hot encoding, which creates one binary column per category, or label encoding, which assigns each category an integer. Because label encoding implies an order, it is best suited to categories that have a natural order.
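Here is a minimal Python sketch of the extraction and transformation steps. The age bins in `age_group` are hypothetical, and the alphabetical category order used by both encoders is an arbitrary choice for illustration.

```python
def age_group(age):
    """Feature extraction: bin a numeric age into a category (hypothetical cut-offs)."""
    if age < 18:
        return "minor"
    if age < 65:
        return "adult"
    return "senior"


def one_hot(values):
    """One-hot encoding: one 0/1 column per category (columns sorted alphabetically)."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


def label_encode(values):
    """Label encoding: one integer per category (implies an order)."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return mapping, [mapping[v] for v in values]


print([age_group(a) for a in (12, 40, 70)])  # ['minor', 'adult', 'senior']
colors = ["red", "green", "red", "blue"]
print(one_hot(colors))
# (['blue', 'green', 'red'], [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]])
print(label_encode(colors))
# ({'blue': 0, 'green': 1, 'red': 2}, [2, 1, 2, 0])
```

With label encoding, a model may treat “red” (2) as “greater than” “blue” (0), which is meaningless for colors. One-hot encoding avoids that, at the cost of one extra column per category.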

Data Exploration and Visualization

Data exploration and visualization are essential for understanding the characteristics of the data and identifying potential problems. Think of it as inspecting your ingredients before starting to cook.

Common data visualization techniques:

- Histograms: Visualizing the distribution of a single variable.

- Scatter plots: Visualizing the relationship between two variables.

- Box plots: Summarizing the distribution of a variable, including its median, quartiles, and outliers.

Applications of data visualization:

- Identifying patterns and trends: Visualizations can reveal patterns, trends, and relationships within the data that might not be obvious from raw data.

- Detecting outliers: Outliers can be identified visually, helping to address potential data quality issues.

- Understanding data distributions: Visualizations help understand the distribution of variables, revealing skewness or unusual patterns.

Identifying potential problems:

- Skewed distributions: Visualizations can reveal skewed distributions, which may call for a transformation (such as a log transform) or may point to sampling bias in the data.

- Missing values: Visualizations can highlight missing values, prompting further investigation and handling.
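Even without a plotting library, the numbers behind two of these charts are easy to compute. This Python sketch prints a crude text histogram and returns the five-number summary (minimum, first quartile, median, third quartile, maximum) that a box plot draws. The ages are hypothetical; the bin count and the `inclusive` quantile method are illustrative choices.

```python
import statistics


def text_histogram(values, bins=4):
    """Print a text histogram with equal-width bins and return the bin counts."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1  # fall back to width 1 if all values are equal
    counts = [0] * bins
    for v in values:
        index = min(int((v - lo) / width), bins - 1)  # the maximum goes in the last bin
        counts[index] += 1
    for i, count in enumerate(counts):
        start = lo + i * width
        print(f"{start:6.1f} to {start + width:6.1f} | {'#' * count}")
    return counts


def five_number_summary(values):
    """Minimum, Q1, median, Q3, maximum: the numbers a box plot draws."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)


ages = [22, 25, 27, 29, 31, 33, 34, 36, 41, 78]  # hypothetical ages
print(text_histogram(ages))       # [7, 2, 0, 1]
print(five_number_summary(ages))  # (22, 27.5, 32.0, 35.5, 78)
```

On this data most values land in the first bin and a single value sits far out in the last one, so both the right skew and the outlier are visible at a glance.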

3. Model Training and Evaluation

Now that we have a good understanding of the fundamentals of machine learning and how to prepare our data, let’s dive into the heart of the process: model training and evaluation. This is where we take our prepared data and use it to build and assess a machine learning model that can make predictions on unseen data.

3.1 Model Training

Model training is the process of feeding a machine learning algorithm with our prepared data so it can learn the underlying patterns and relationships. Let’s take the example of Logistic Regression, a popular algorithm for classification tasks.

Data Preparation

Before we can train our Logistic Regression model, we need to prepare our dataset. This involves several steps:

- Data Cleaning: Handling inconsistencies, missing values, and outliers in the data. This ensures the model learns from clean and accurate information.

- Feature Engineering: Transforming raw features into a form that is more suitable for the model. This might involve creating new features based on existing ones, scaling numerical features, or encoding categorical features.

- Splitting into Training and Validation Sets: Dividing the data into a training set, used to fit the model, and a validation set, used to evaluate the model’s performance on unseen data. A third, held-out test set is often kept aside for a final, unbiased estimate of performance.
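Shuffling before splitting matters whenever the data is sorted (by date, label, or ID). Here is a minimal Python sketch; the 80/20 ratio and the fixed seed are assumptions chosen for illustration.

```python
import random


def train_validation_split(rows, validation_fraction=0.2, seed=42):
    """Shuffle a copy of `rows` and split it into (train, validation)."""
    shuffled = list(rows)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


examples = list(range(10))  # hypothetical dataset of 10 example IDs
train, val = train_validation_split(examples)
print(len(train), len(val))  # 8 2
```

For imbalanced classification data, a stratified split (splitting each class separately) keeps the class proportions similar in both sets.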

Model Initialization

Once our data is prepared, we configure the Logistic Regression model’s hyperparameters: settings chosen before training that control how the model learns from the data. The model’s weights, by contrast, are learned during training and typically start from zeros or small random values. Some common hyperparameters include:

- Regularization: A technique used to prevent overfitting, where the model learns the training data too well and performs poorly on new data. Common regularization techniques include L1 and L2 regularization, which add a penalty on the size of the model’s weights to the cost function.

- Learning Rate: When the model is trained with gradient descent, the learning rate controls how large a step it takes when adjusting its parameters. A higher learning rate can lead to faster convergence but might overshoot the optimal solution or even diverge. A lower learning rate is more stable but can make training much slower.

Training Process

The training process involves iteratively feeding the model with the training data and adjusting its parameters to minimize a cost function. The cost function measures the difference between the model’s predictions and the actual target values. The goal of the training process is to find the set of parameters that minimizes this cost function.

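The cost function is a mathematical function that quantifies the error of the model’s predictions. It is typically chosen to be differentiable, allowing us to use optimization algorithms to find the minimum. For logistic regression, the standard choice is the log loss (binary cross-entropy), averaged over the $n$ training examples:

$$J(w, b) = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \log \hat{y}_i + (1 - y_i)\log(1 - \hat{y}_i)\right], \qquad \hat{y}_i = \sigma(w^\top x_i + b)$$

where $\sigma$ is the sigmoid function.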

The training process uses optimization algorithms, such as gradient descent, to update the model’s parameters in the direction that minimizes the cost function.
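The pieces described so far (zero-initialized weights, a log-loss cost function, an L2 regularization penalty, a learning rate, and gradient descent) fit together in a short from-scratch sketch. This is plain Python on a hypothetical one-feature dataset; real projects would normally use a library implementation, and the hyperparameter values here are illustrative, not recommendations.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(x, w, b):
    """Probability of class 1 for one example x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def log_loss(X, y, w, b, eps=1e-12):
    """Average binary cross-entropy; eps guards against log(0)."""
    total = 0.0
    for x, t in zip(X, y):
        p = predict_proba(x, w, b)
        total -= t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
    return total / len(X)


def train_logistic_regression(X, y, learning_rate=0.1, l2=0.0, epochs=1000):
    """Batch gradient descent on log loss + (l2 / 2) * sum(w_j ** 2).
    The bias b is not regularized."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0  # initial state: all weights start at zero
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, t in zip(X, y):
            error = predict_proba(x, w, b) - t  # d(log loss)/d(linear score)
            for j in range(d):
                grad_w[j] += error * x[j]
            grad_b += error
        # Step against the averaged gradient (plus the L2 term for the weights).
        w = [wj - learning_rate * (gj / n + l2 * wj) for wj, gj in zip(w, grad_w)]
        b -= learning_rate * grad_b / n
    return w, b


# Hypothetical data: hours studied -> passed the exam (1) or not (0).
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [5.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic_regression(X, y, learning_rate=0.5, epochs=2000)
print("loss before:", log_loss(X, y, [0.0], 0.0))  # log(2) ≈ 0.693
print("loss after: ", log_loss(X, y, w, b))
print("predictions:", [int(predict_proba(x, w, b) >= 0.5) for x in X])
```

Because `predict_proba(x, w, b) - t` is the derivative of the log loss with respect to the linear score, the gradient needs no separate sigmoid derivative. Setting `l2` above zero pulls the weights toward zero, which is how the regularization described earlier limits overfitting.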

Model Evaluation

After training, we evaluate the model’s performance on the validation set. This helps us assess how well the model generalizes to unseen data. Common evaluation metrics for classification tasks include accuracy, precision, recall, and F1-score.


3.2 Model Evaluation Metrics

Here’s a table comparing different evaluation metrics commonly used for machine learning models:

| Metric | Definition | Interpretation |
|---|---|---|
| Accuracy | The proportion of correctly classified instances. | Measures the overall correctness of the model. |
| Precision | The proportion of correctly predicted positive instances out of all instances predicted as positive. | Measures the model’s ability to avoid false positives. |
| Recall | The proportion of correctly predicted positive instances out of all actual positive instances. | Measures the model’s ability to identify all true positive instances. |
| F1-Score | The harmonic mean of precision and recall. | Provides a balanced measure of precision and recall. |
| AUC (Area Under the Curve) | The area under the Receiver Operating Characteristic (ROC) curve. | Measures the model’s ability to distinguish between positive and negative instances. |

The choice of evaluation metric depends on the specific problem and business objectives. For example, in a medical diagnosis scenario, recall might be more important than precision, as we want to avoid missing any true positive cases. On the other hand, in a spam detection system, precision might be more important, as we want to minimize false positives that might lead to legitimate emails being marked as spam.
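All of the metrics in the table can be computed directly from predictions. This is a minimal Python sketch with hypothetical scores for ten examples. `roc_auc` uses the rank interpretation of AUC (the probability that a randomly chosen positive is scored above a randomly chosen negative), which is simple but quadratic in the number of examples.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Probability that a random positive gets a higher score than a random
    negative (ties count as half); equals the area under the ROC curve."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical model scores for 4 positive and 6 negative examples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4, 0.35, 0.05]
y_pred = [int(s >= 0.5) for s in scores]  # threshold at 0.5

print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
print(roc_auc(y_true, scores))  # 0.875
```

Lowering the 0.5 threshold would typically raise recall at the cost of precision, which is the trade-off the medical and spam examples describe. AUC, by contrast, does not depend on any single threshold.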

3.3 Optimal Model Selection and Hyperparameter Tuning

Selecting the optimal model for a given problem involves considering several factors:

Model Complexity

There is a trade-off between model complexity and performance. A more complex model can potentially capture more intricate patterns in the data but is more prone to overfitting. A simpler model might be less prone to overfitting but might not capture all the relevant patterns.

Data Characteristics

The characteristics of the data can influence the choice of model. For example, if the data is highly non-linear, a non-linear model like a Decision Tree or Random Forest might be more appropriate than a linear model like Logistic Regression.

Business Objectives

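The business objectives should guide the model selection process, usually by weighing the cost of each kind of error. For example, if false positives are expensive (such as blocking a legitimate customer’s transaction), a model that prioritizes precision might be preferred. If false negatives are expensive (such as letting fraud go undetected), a model that prioritizes recall might be more suitable.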

Hyperparameter Tuning

Hyperparameters are parameters that are not learned from the data but are set before training. Tuning these hyperparameters can significantly improve the model’s performance. Common techniques for hyperparameter tuning include:

- Grid Search: Exhaustively searching over a grid of hyperparameter values.

- Random Search: Randomly sampling hyperparameter values from a distribution.

- Bayesian Optimization: Using a probabilistic model to guide the search for optimal hyperparameters.

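Once we have trained and tuned multiple models, we can evaluate their performance using the chosen evaluation metrics and select the model that performs best on the validation set. Because that choice is itself tuned to the validation set, the selected model’s performance is best confirmed on a separate test set.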
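Grid search and random search differ only in how candidate settings are generated. In this Python sketch, `fake_validation_score` is a hypothetical stand-in for “train a model with these hyperparameters and return its validation score,” and the search ranges are illustrative. Bayesian optimization is not shown; it would replace the candidate generator with a model-guided choice.

```python
import itertools
import random


def grid_search(evaluate, grid):
    """Evaluate every combination in `grid` (name -> list of values); return the best."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(evaluate, space, n_trials=20, seed=0):
    """Evaluate `n_trials` random combinations; `space` maps each name to a
    sampler function that takes a random.Random and returns a value."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_validation_score(learning_rate, l2):
    """Hypothetical stand-in for 'train with these settings, return the
    validation score'. It peaks at learning_rate=0.1, l2=0.01."""
    return -((learning_rate - 0.1) ** 2) - 100 * (l2 - 0.01) ** 2


grid = {"learning_rate": [0.01, 0.1, 1.0], "l2": [0.0, 0.01, 0.1]}
best, _ = grid_search(fake_validation_score, grid)
print(best)  # {'learning_rate': 0.1, 'l2': 0.01}

space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
    "l2": lambda rng: rng.choice([0.0, 0.001, 0.01, 0.1]),
}
print(random_search(fake_validation_score, space, n_trials=30))
```

Random search can explore continuous ranges (such as the log-uniform learning rate here) that a fixed grid would only sample at a few points.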

4. Types of Machine Learning Algorithms

Now that we understand the fundamentals of machine learning, data preparation, and model evaluation, let’s delve into the diverse world of machine learning algorithms. These algorithms are the heart of any machine learning system, enabling computers to learn from data and make predictions.

Supervised Learning Algorithms

Supervised learning algorithms are trained on labeled data, meaning each data point has a corresponding output or target value. These algorithms learn the relationship between inputs and outputs and use this knowledge to predict the output for new, unseen data.

- Linear Regression: This algorithm predicts a continuous target variable based on a linear relationship with one or more input variables. It finds the best-fit line (or, with several inputs, hyperplane) that minimizes the sum of squared differences between predicted and actual values.

For example, predicting house prices based on size and location.

- Logistic Regression: This algorithm is used for binary classification problems, where the target variable has two possible outcomes. It uses a sigmoid function to predict the probability of an event occurring.

For instance, predicting whether a customer will click on an ad based on their browsing history.

- Decision Trees: This algorithm builds a tree-like structure to make predictions based on a series of decisions. Each internal node tests a feature (for example, “is the credit score above 650?”), each branch corresponds to an outcome of that test, and each leaf holds a prediction.

For example, predicting whether a loan application will be approved based on factors like income, credit score, and loan amount.

- Support Vector Machines (SVMs): This algorithm finds the optimal hyperplane that separates data points into different classes. It aims to maximize the margin between the hyperplane and the closest data points of each class.

For example, classifying images of handwritten digits.
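With a single feature, least-squares linear regression has a closed-form solution: slope = covariance(x, y) / variance(x), and intercept = mean(y) - slope × mean(x). Here is a minimal Python sketch with hypothetical house sizes and prices (location is left out to keep it to one feature).

```python
import statistics


def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y ≈ slope * x + intercept with one feature."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx  # assumes the xs are not all identical
    intercept = mean_y - slope * mean_x
    return slope, intercept


sizes = [50, 70, 90, 110, 130]      # hypothetical house sizes (square meters)
prices = [150, 200, 240, 290, 330]  # hypothetical prices (thousands)
slope, intercept = fit_simple_linear_regression(sizes, prices)
print(slope, intercept)             # 2.25 39.5
print(slope * 100 + intercept)      # predicted price for 100 m²: 264.5
```

On Python 3.10 or later, `statistics.linear_regression(sizes, prices)` should return the same slope and intercept.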

Unsupervised Learning Algorithms

Unsupervised learning algorithms are trained on unlabeled data, meaning there are no corresponding output values. These algorithms aim to discover patterns and relationships within the data itself.

- Clustering: These algorithms group data points into clusters based on their similarity. Different clustering algorithms use different distance metrics and methods to define clusters.

For example, segmenting customers into different groups based on their purchasing behavior.

- Dimensionality Reduction: These techniques reduce the number of features in a dataset while preserving as much information as possible. They can be used to simplify data, improve model performance, and make data visualization easier.

For example, reducing the number of features in a dataset of customer reviews to identify the most important factors influencing customer satisfaction.
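k-means is one of the most widely used clustering algorithms. This Python sketch implements its standard form (Lloyd’s algorithm) on hypothetical customer data of (visits per year, average spend); the data, k = 2, and the seed are assumptions for illustration.

```python
import math
import random


def assign(points, centroids):
    """Put each point in the cluster of its nearest centroid (Euclidean distance)."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid updates until stable."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(iterations):
        clusters = assign(points, centroids)
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)  # an empty cluster keeps its old centroid
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, assign(points, centroids)


# Hypothetical customers: (visits per year, average spend)
customers = [(1, 20), (2, 25), (1, 22), (2, 18),
             (10, 200), (12, 210), (11, 190), (9, 205)]
centroids, clusters = kmeans(customers, k=2)
for cluster in clusters:
    print(sorted(cluster))
```

Because spend is measured on a much larger scale than visits, it dominates the distance here; scaling the features first, as described in the normalization section, is usually advisable.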

Reinforcement Learning Algorithms

Reinforcement learning algorithms learn through trial and error, interacting with an environment to maximize a reward signal. They are often used in applications where a system needs to learn how to perform a complex task through experience.

- Q-Learning: This algorithm learns an optimal policy by estimating the expected cumulative (discounted) reward of taking a specific action in a given state and acting optimally afterward.

For example, training a robot to navigate a maze by rewarding it for reaching the goal and penalizing it for hitting obstacles.
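Here is a tabular Q-learning sketch in Python on a hypothetical five-cell corridor rather than a maze: the agent starts in cell 0, earns +1 for reaching cell 4, and pays a small -0.01 penalty for every other step. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative values.

```python
import random

N_STATES, GOAL = 5, 4  # cells 0..4; reaching cell 4 ends the episode
ACTIONS = (0, 1)       # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment: move one cell, clipped at the walls."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else -0.01
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random sometimes, otherwise act greedily.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]
print(policy)  # expected: [1, 1, 1, 1] (always move right)
```

Each update nudges Q(s, a) toward reward + gamma × max Q(s′, ·), which is how the reward at the goal propagates back to earlier cells and makes “move right” the best-valued action everywhere.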

Comparing Machine Learning Algorithms

| Algorithm Name | Type | Application | Advantages | Disadvantages |
|---|---|---|---|---|
| Linear Regression | Supervised | Predicting continuous target variables | Simple to understand and implement, interpretable results | Assumes linear relationship between variables, sensitive to outliers |
| Logistic Regression | Supervised | Binary classification | Interpretable results, efficient for large datasets | Assumes linear decision boundary, not suitable for complex datasets |
| Decision Trees | Supervised | Classification and regression | Easy to understand and interpret, can handle non-linear relationships | Prone to overfitting, can be unstable with small changes in data |
| Support Vector Machines (SVMs) | Supervised | Classification | Effective for high-dimensional data, robust to outliers | Can be computationally expensive, difficult to interpret |
| Clustering | Unsupervised | Grouping data points into clusters | Can discover hidden patterns in data, useful for exploratory analysis | Choice of clustering algorithm can impact results, difficult to evaluate cluster quality |
| Dimensionality Reduction | Unsupervised | Reducing the number of features in a dataset | Simplifies data, improves model performance, facilitates visualization | Can lose some information, difficult to determine optimal dimensionality |
| Q-Learning | Reinforcement Learning | Learning optimal policies in complex environments | Can learn from experience, suitable for tasks with delayed rewards | Requires a large amount of data, can be computationally expensive |

5. Machine Learning in Action

Machine learning isn’t just a theoretical concept; it’s transforming industries and impacting our daily lives. From personalized recommendations to medical diagnoses, machine learning is changing how we work, interact, and understand the world around us. Let’s explore some real-world examples of machine learning applications across different domains and delve into its societal impact.

Real-World Applications of Machine Learning

Machine learning algorithms are being implemented in various fields, revolutionizing how we approach problems and solve them. Here are some examples:

- Healthcare: Machine learning is used for disease prediction, diagnosis, and treatment planning. For instance, AI-powered systems can analyze medical images to detect cancer cells or predict the risk of heart disease. These systems can assist doctors in making more accurate diagnoses and developing personalized treatment plans.

- Finance: Machine learning algorithms are employed for fraud detection, risk assessment, and algorithmic trading. By analyzing transaction data, machine learning models can identify suspicious activities and prevent fraudulent transactions. They can also help assess creditworthiness and predict market trends, guiding investment decisions.

- E-commerce: Machine learning powers personalized product recommendations, targeted advertising, and customer segmentation. By analyzing browsing history and purchase patterns, algorithms can suggest relevant products to individual customers, enhancing their shopping experience and increasing sales.

- Transportation: Machine learning is driving innovation in autonomous vehicles, traffic management, and route optimization. Self-driving cars utilize machine learning to perceive their surroundings, make decisions, and navigate safely. Machine learning algorithms can also optimize traffic flow and predict congestion, improving transportation efficiency.

Impact of Machine Learning on Society

Machine learning has a profound impact on society, bringing both benefits and ethical considerations. Let’s explore some key aspects:

- Benefits: Machine learning offers numerous benefits, including increased efficiency, improved accuracy, and personalized experiences. It can automate tasks, reduce human error, and provide tailored solutions to individual needs. For example, in healthcare, machine learning can help diagnose diseases earlier and more accurately, leading to better treatment outcomes.

- Ethical Considerations: While machine learning offers significant advantages, it also raises ethical concerns. These include bias in algorithms, data privacy, job displacement, and the potential for misuse. For instance, algorithms trained on biased data can perpetuate existing societal inequalities. It’s crucial to address these concerns and ensure the responsible development and deployment of machine learning technologies.

Machine Learning Use Cases and Impact

Here’s a table showcasing diverse machine learning use cases with descriptions and their potential impact:

| Use Case | Description | Potential Impact |
|---|---|---|
| Image Recognition | Identifying objects and scenes in images. | Improved medical diagnosis, automated security systems, personalized image search. |
| Natural Language Processing | Understanding and generating human language. | Enhanced language translation, intelligent chatbots, automated content creation. |
| Predictive Maintenance | Predicting equipment failures before they occur. | Reduced downtime, improved safety, optimized maintenance schedules. |
| Customer Segmentation | Grouping customers based on their characteristics and behaviors. | Targeted marketing campaigns, personalized product recommendations, improved customer service. |
| Fraud Detection | Identifying fraudulent transactions and activities. | Reduced financial losses, improved security, enhanced customer trust. |

6. The Role of Data Scientists and Engineers

Machine learning is not a solo act; it thrives on the collaborative efforts of skilled individuals who play crucial roles in its implementation and success. Data scientists and engineers are the key players in this field, wielding their expertise to extract meaningful insights from data and build powerful machine learning models.

Responsibilities and Skills

Data scientists and engineers are responsible for the entire machine learning lifecycle, from data collection and preparation to model development, deployment, and ongoing monitoring. They require a unique blend of technical and analytical skills.

- Data Acquisition and Preparation: Data scientists and engineers are responsible for collecting, cleaning, and transforming raw data into a format suitable for machine learning algorithms. This involves handling missing values, dealing with outliers, and transforming categorical variables into numerical representations. They leverage their knowledge of data structures, databases, and data wrangling techniques to ensure data quality and consistency.

- Model Selection and Training: Data scientists select appropriate machine learning algorithms based on the problem at hand and the characteristics of the data. They then train these algorithms using the prepared data, fine-tuning hyperparameters to achieve optimal performance. This requires expertise in various machine learning algorithms, statistical modeling, and optimization techniques.

- Model Evaluation and Deployment: Once a model is trained, data scientists and engineers evaluate its performance using metrics relevant to the specific task. They deploy the model into production environments, ensuring it integrates seamlessly with existing systems and infrastructure. This involves understanding performance monitoring, version control, and continuous integration and delivery (CI/CD) principles.

7. Machine Learning and Artificial Intelligence

Machine learning and artificial intelligence (AI) are intertwined concepts, with machine learning serving as a powerful tool for building intelligent systems. To understand their relationship, it’s crucial to define AI and explore how machine learning contributes to its advancement.

The Relationship Between Machine Learning and Artificial Intelligence

Artificial intelligence (AI) refers to the ability of a computer or machine to perform tasks that typically require human intelligence, such as learning, problem-solving, and decision-making. Machine learning is a subset of AI that focuses on enabling systems to learn from data without explicit programming.

- Machine learning algorithms are designed to identify patterns and insights from data, allowing them to make predictions or decisions based on the learned information.

- AI systems leverage machine learning techniques to achieve their intelligent capabilities, allowing them to adapt and improve their performance over time.

Machine learning is a powerful tool for solving a wide range of AI tasks, including:

- Natural Language Processing (NLP): Machine learning algorithms are used to analyze and understand human language, enabling tasks like text translation, sentiment analysis, and chatbot development.

- Computer Vision: Machine learning powers image recognition, object detection, and video analysis, enabling applications like self-driving cars, medical imaging, and facial recognition.

- Robotics: Machine learning algorithms help robots learn from experience and adapt to changing environments, enabling them to perform complex tasks like manipulation, navigation, and object grasping.

- Decision-Making: Machine learning models can analyze large datasets to identify patterns and predict future outcomes, assisting in decision-making processes in various fields, such as finance, healthcare, and marketing.

The role of data is central to machine learning and AI. Data provides the foundation for training machine learning models, allowing them to learn patterns and make informed decisions. The quality and quantity of data significantly impact the performance of AI systems.

How Machine Learning Contributes to the Advancement of AI Systems

Machine learning has revolutionized AI by enabling systems to learn from data and improve their performance over time. This has led to significant advancements in various areas:

- Natural Language Processing (NLP): Machine learning algorithms have enabled AI systems to understand and generate human language with greater accuracy and fluency. For example, advanced language models like GPT-3 can generate realistic and coherent text, translate languages, and answer questions in a conversational manner.

- Computer Vision: Machine learning has drastically improved the accuracy and efficiency of image recognition and object detection tasks. AI systems powered by machine learning are now able to identify objects with high precision, enabling applications like autonomous vehicles, medical diagnosis, and security systems.

- Robotics: Machine learning algorithms allow robots to learn from their experiences, adapt to new environments, and perform complex tasks with greater autonomy. For example, robots equipped with machine learning algorithms can now navigate complex environments and grasp objects with precision, and researchers are applying these techniques to robot-assisted surgery.

- Decision-Making: Machine learning algorithms can analyze vast amounts of data to identify patterns and predict future outcomes, empowering AI systems to make more informed decisions in areas like finance, healthcare, and marketing. For example, machine learning models are used to predict stock market trends, personalize healthcare treatments, and optimize marketing campaigns.

Machine Learning Compared to Other AI Approaches

While machine learning is a dominant force in AI, other approaches have also shaped the field. Understanding the differences between these approaches is essential for choosing the right tool for a specific AI task.

Expert Systems

Expert systems rely on predefined rules and knowledge bases to solve problems. They mimic the reasoning and decision-making processes of human experts in specific domains. For example, a medical expert system might use rules based on medical knowledge to diagnose diseases.

- Expert systems excel in tasks with well-defined rules and limited data variability.

- However, they struggle to handle complex or evolving data, requiring constant updates and maintenance as new information becomes available.

- Machine learning offers a more adaptable approach, learning from data and adjusting its behavior as new information emerges.

Rule-Based Systems

Rule-based systems use predefined rules to make decisions. These rules are typically expressed as “if-then” statements, defining the system’s behavior based on specific conditions. For example, a rule-based driver-assistance system might use a rule like “if the light is red, then stop.”

- Rule-based systems are relatively easy to understand and implement, but they can be inflexible and difficult to maintain as data changes.

- Machine learning offers a more flexible approach, allowing systems to learn from data and adapt to new situations without requiring manual rule updates.
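The contrast can be sketched in a few lines of Python. This is a minimal illustration with several assumptions. It models a hypothetical spam filter that looks at one invented feature, the number of links in an email, and trains on a tiny made-up labeled set. The rule-based version hard-codes its threshold. The learned version picks whichever threshold classifies the labeled examples best, which makes it a one-feature "decision stump".

```python
# Hypothetical toy data: (number_of_links, is_spam), invented for illustration.
TRAINING_DATA = [(0, False), (1, False), (2, False), (3, False),
                 (4, True), (6, True), (7, True), (9, True)]


def rule_based_is_spam(num_links: int) -> bool:
    """Hand-written if-then rule: a person chose the threshold of 5."""
    if num_links > 5:
        return True
    return False


def learn_threshold(data: list[tuple[int, bool]]) -> int:
    """Pick the threshold t that maximizes accuracy of the rule 'spam if links > t'."""
    candidates = sorted({x for x, _ in data})
    best_t, best_correct = candidates[0], -1
    for t in candidates:
        correct = sum((x > t) == label for x, label in data)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t


def learned_is_spam(num_links: int, threshold: int) -> bool:
    """Same rule shape, but the threshold comes from data instead of a person."""
    return num_links > threshold


if __name__ == "__main__":
    t = learn_threshold(TRAINING_DATA)
    print("learned threshold:", t)  # → learned threshold: 3
    print(rule_based_is_spam(4), learned_is_spam(4, t))  # → False True
```

When new labeled emails arrive, re-running `learn_threshold` updates the behavior automatically. The hand-written rule changes only when someone edits it.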

8. Challenges and Future Directions

While machine learning has achieved remarkable progress, it’s crucial to acknowledge the existing challenges and explore future directions that can further enhance its capabilities and address potential limitations.

8.1. Challenges of Current Machine Learning Techniques

The advancement of machine learning has brought about numerous applications across various domains. However, it’s important to recognize the inherent challenges that accompany these techniques. Understanding these challenges is essential for developing robust and reliable machine learning systems.

- Data Bias and Fairness: Machine learning models are trained on data, and if this data reflects biases present in society, the models may perpetuate or amplify those biases. For instance, a facial recognition system trained on a dataset predominantly composed of light-skinned individuals might struggle to accurately identify individuals with darker skin tones.

Addressing data bias requires careful data collection and preprocessing techniques, as well as the development of algorithms that are less susceptible to bias. Strategies like data augmentation, bias mitigation algorithms, and fairness-aware model training can help to minimize the impact of bias and promote fairness in machine learning systems.
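One simple bias-mitigation technique is reweighing. Each training example gets a weight chosen so that group membership and outcome label become statistically independent in the weighted data: w(g, y) = P(g) · P(y) / P(g, y). The sketch below is minimal and assumes the data is a list of (group, label) pairs. The groups and counts are hypothetical.

```python
from collections import Counter


def reweighing_weights(samples: list[tuple[str, int]]) -> dict[tuple[str, int], float]:
    """Weight per (group, label) cell: P(group) * P(label) / P(group, label).

    Cells that are rarer than independence would predict get weights > 1;
    cells that are over-represented get weights < 1.
    """
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    cell_counts = Counter(samples)
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * count)
        for (g, y), count in cell_counts.items()
    }


if __name__ == "__main__":
    # Hypothetical data: group "a" receives the positive label far more often than "b".
    data = [("a", 1)] * 6 + [("a", 0)] * 2 + [("b", 1)] * 2 + [("b", 0)] * 6
    for cell, w in sorted(reweighing_weights(data).items()):
        print(cell, round(w, 3))
```

In this invented data, the under-represented combinations ("a", 0) and ("b", 1) get weight 2.0. The over-represented ones get about 0.667. The weights can then go to any learner that accepts per-sample weights; many scikit-learn estimators take a `sample_weight` argument in `fit`.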

- Model Interpretability and Explainability: Many machine learning models, especially deep neural networks, are often referred to as “black boxes” due to their complex internal workings. This lack of transparency makes it difficult to understand how the model arrives at its predictions. This can be a significant challenge in domains like healthcare, where decisions based on machine learning models need to be transparent and explainable to ensure patient trust and accountability.

Techniques like feature importance analysis, decision tree visualization, and rule extraction can help to shed light on the decision-making process of complex models, improving interpretability and explainability.
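One model-agnostic form of feature importance analysis is permutation importance. It shuffles one feature column at a time and measures how much the model's accuracy drops. This is a minimal sketch. The "black box" model and the six data points are hypothetical, chosen so that the model secretly ignores its second feature.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], X: list, y: list) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when one feature column at a time is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with column j replaced by the shuffled values.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box(row: Row) -> int:
    """Hypothetical opaque model: uses feature 0 only and ignores feature 1."""
    return int(row[0] > 0.5)


if __name__ == "__main__":
    X = [[0.1, 0.9], [0.2, 0.1], [0.8, 0.5], [0.9, 0.3], [0.3, 0.7], [0.7, 0.2]]
    y = [0, 0, 1, 1, 0, 1]
    print(permutation_importance(black_box, X, y))
```

Feature 1 scores exactly zero, which reveals that the model does not use it. scikit-learn ships a production version of this idea as `sklearn.inspection.permutation_importance`.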

- Data Privacy and Security: Machine learning often relies on vast amounts of data, which raises concerns about data privacy and security. Sensitive information, such as medical records or financial data, needs to be handled with utmost care to prevent unauthorized access and misuse.

Techniques like data anonymization, differential privacy, and secure multi-party computation can help to protect data privacy while still enabling the use of data for machine learning. Additionally, robust security measures are essential to prevent data breaches and ensure the integrity of machine learning systems.
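Differential privacy is often introduced through the Laplace mechanism. To release a count, you add noise drawn from a Laplace distribution with scale sensitivity / ε. Adding or removing one person changes a count by at most 1, so a counting query has sensitivity 1. This is a minimal sketch: the patient records and the ε values are hypothetical. Hand-rolled floating-point noise like this has known weaknesses, so production systems should rely on a vetted differential-privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Counting queries have sensitivity 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical records: (age, has_condition)
    records = [(34, True), (51, False), (47, True), (29, False), (63, True)]
    print(private_count(records, lambda r: r[1], epsilon=0.5, rng=rng))
```

A smaller ε adds more noise and gives stronger privacy. Choosing ε is a policy decision that the code cannot make for you.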

- Scalability and Efficiency: Training and deploying machine learning models, particularly for large datasets and complex tasks, can be computationally demanding and time-consuming. The ability to scale machine learning models to handle increasing data volumes and complex tasks is crucial for real-world applications.

Techniques like distributed computing, parallel processing, and model compression can help to improve the scalability and efficiency of machine learning systems.
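Model compression covers several techniques. A common one is post-training quantization, which stores weights as 8-bit integers plus a scale factor instead of 32-bit floats. This is a minimal sketch of symmetric per-tensor int8 quantization. It assumes the weights arrive as a plain list of floats; real frameworks provide their own quantization tooling.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9, -0.55]
    q, scale = quantize_int8(weights)
    print(q)  # → [42, -127, 0, 90, -55]
    restored = dequantize(q, scale)
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs 1 byte instead of 4. The cost is a rounding error of at most half the scale per weight.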

8.2. Emerging Trends and Future Directions

Machine learning research is continuously evolving, leading to exciting new trends and promising future directions. These advancements have the potential to address some of the challenges outlined above and unlock new possibilities in various fields.

- Explainable AI (XAI): Explainable AI (XAI) aims to make machine learning models more transparent and understandable. XAI techniques focus on providing insights into the decision-making process of models, allowing users to understand the reasoning behind predictions. These techniques can range from simple visualizations of feature importance to more sophisticated methods that generate human-readable explanations.

XAI is particularly crucial in domains where trust and accountability are paramount, such as healthcare, finance, and legal systems.

- Federated Learning: Federated learning enables collaborative machine learning without sharing raw data. In this approach, models are trained on decentralized data sources, with updates aggregated on a central server. This approach offers significant benefits in terms of data privacy and security, as sensitive data remains on individual devices or within organizations.

Federated learning has the potential to revolutionize data analysis in fields like healthcare, where sharing patient data across institutions can be challenging due to privacy regulations.
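The widely used Federated Averaging (FedAvg) scheme works in two stages. Each client trains on its own data and sends back only updated model parameters. The server then averages those parameters, weighting each client by how many examples it trained on. This is a minimal sketch of the server-side aggregation step. It assumes models are plain lists of floats, and the client numbers are hypothetical.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client parameter vectors (the FedAvg aggregation step).

    Each update is (parameters, num_local_examples). Raw training data never
    reaches this function; only the parameters do.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        for i, p in enumerate(params):
            averaged[i] += p * n / total
    return averaged


if __name__ == "__main__":
    # Hypothetical updates from three hospitals with different dataset sizes.
    updates = [([1.0, 2.0], 100), ([3.0, 0.0], 300), ([2.0, 2.0], 100)]
    print(federated_average(updates))
```

Parameter updates can still leak information about the underlying data. For that reason, federated learning is often paired with secure aggregation or differential privacy.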

- Reinforcement Learning: Reinforcement learning is a powerful paradigm that allows agents to learn through trial and error by interacting with their environment. This approach has shown promising results in areas like robotics, game playing, and autonomous systems. Reinforcement learning algorithms can learn optimal strategies for complex tasks by maximizing rewards over time.

As reinforcement learning techniques continue to advance, they are poised to play a significant role in automating complex tasks and optimizing decision-making processes.
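Tabular Q-learning is a classic illustration of trial-and-error learning. After each step it moves its estimate Q(s, a) toward r + γ · max Q(s′, ·). This is a minimal sketch under several assumptions. The environment is a hypothetical five-state corridor in which only reaching the right-hand end earns a reward. The hyperparameters are illustrative defaults, not tuned values.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, move: int) -> tuple[int, float, bool]:
    """Toy environment: reward 1.0 only on reaching the right-hand end."""
    next_state = min(max(state + move, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy choice; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target: reward plus discounted best value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


if __name__ == "__main__":
    q = q_learning()
    print(["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)])
```

Nobody tells the agent that "right" is correct. The preference emerges only from the rewards it collects across many episodes.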

- Generative AI: Generative AI models are capable of creating new data, such as images, text, and music, that resemble real-world examples. Techniques like Generative Adversarial Networks (GANs) have made it possible to generate realistic and novel content. Generative AI has the potential to transform industries like art, music, and entertainment, opening up new avenues for creativity and expression.

9. Machine Learning Libraries and Tools

Machine learning libraries and tools are essential components of the machine learning ecosystem, providing developers with pre-built functions, algorithms, and frameworks that simplify and accelerate the process of building and deploying machine learning models. These libraries abstract away the complexities of low-level programming, allowing practitioners to focus on the core aspects of model development, such as data preparation, feature engineering, model selection, and evaluation. This section will delve into three popular Python libraries: scikit-learn, TensorFlow, and PyTorch.

These libraries have become industry standards, offering a wide range of functionalities and catering to diverse machine learning applications.

Scikit-learn: A Comprehensive Machine Learning Library

Scikit-learn is a widely used Python library for machine learning, known for its simplicity, efficiency, and comprehensive set of algorithms. It provides a user-friendly interface, making it suitable for both beginners and experienced practitioners.

Key Functionalities

- Classification: Scikit-learn offers various classification algorithms, including logistic regression, support vector machines (SVMs), decision trees, random forests, and naive Bayes. These algorithms are used to predict categorical labels, such as classifying emails as spam or not spam.

- Regression: For predicting continuous values, scikit-learn provides algorithms like linear regression, polynomial regression, and support vector regression. These are useful for tasks like predicting house prices or stock market trends.

- Clustering: Scikit-learn includes algorithms for grouping data points into clusters based on their similarity. Popular clustering algorithms include K-means, hierarchical clustering, and DBSCAN.

- Dimensionality Reduction: To handle high-dimensional data, scikit-learn offers techniques like Principal Component Analysis (PCA), which reduces the number of features while preserving as much of the data's variance as possible, and t-SNE, which is mainly used to project data into two or three dimensions for visualization.

- Model Selection and Evaluation: Scikit-learn provides tools for model selection, hyperparameter tuning, and performance evaluation. These tools help in choosing the best model for a given task and assessing its accuracy and generalization ability.
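To show what a clustering algorithm such as K-means actually does, here is a dependency-free sketch of Lloyd's algorithm. It alternates two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points. The customer data is hypothetical. The sketch uses plain random initialization, whereas scikit-learn's `sklearn.cluster.KMeans` defaults to the smarter k-means++ initialization.

```python
import random


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points, until nothing changes."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # plain random initialization
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = [
            tuple(sum(dim) / len(cl) for dim in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters


if __name__ == "__main__":
    # Hypothetical customers: (visits per month, average basket size in tens of dollars)
    customers = [(1.0, 2.0), (1.5, 1.8), (1.2, 2.2), (8.0, 8.0), (8.5, 7.5), (7.8, 8.3)]
    centroids, clusters = kmeans(customers, k=2)
    for c, members in zip(centroids, clusters):
        print(c, members)
```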

Advantages of Scikit-learn

- Ease of Use: Scikit-learn has a straightforward API and well-documented functions, making it easy to learn and use, even for beginners.

- Comprehensive Algorithms: The library provides a wide range of algorithms covering various machine learning tasks, from classification and regression to clustering and dimensionality reduction.

- Efficiency: Scikit-learn is built on optimized numerical libraries such as NumPy and SciPy and handles datasets that fit in memory efficiently.

- Community Support: With a large and active community, scikit-learn benefits from extensive documentation, tutorials, and forums, making it easy to find help and support.

Applications of Scikit-learn

- Spam Filtering: Scikit-learn’s classification algorithms can be used to build spam filters that automatically identify and block unwanted emails.

- Image Recognition: Scikit-learn’s classifiers can be applied to extracted image features, or to small images such as handwritten digits, for simple image classification; tasks like object detection usually call for a deep learning framework.

- Customer Segmentation: Clustering algorithms can be used to group customers based on their purchasing behavior, allowing businesses to tailor marketing campaigns more effectively.

- Fraud Detection: Anomaly detection algorithms can be used to identify unusual patterns in financial transactions, helping to prevent fraudulent activities.

TensorFlow: A Powerful Deep Learning Framework

TensorFlow is an open-source machine learning framework developed by Google, renowned for its flexibility, scalability, and support for deep learning. It allows users to define and execute complex machine learning models: TensorFlow 2.x executes operations eagerly by default and can compile Python functions into optimized computational graphs with `tf.function`.

Key Functionalities

- Deep Learning: TensorFlow excels in deep learning, providing tools for building and training various neural network architectures, including convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for natural language processing, and generative adversarial networks (GANs) for image generation.

- Tensor Operations: TensorFlow leverages tensors, multi-dimensional arrays, for efficient numerical computations. It provides a rich set of operations for manipulating tensors, making it suitable for large-scale data processing.

- Automatic Differentiation: TensorFlow automatically calculates gradients, which are crucial for optimizing neural networks during training. This feature simplifies the process of backpropagation and model optimization.

- Deployment: TensorFlow offers tools for deploying trained models on various platforms, including web servers, mobile devices, and embedded systems.
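Automatic differentiation lets frameworks compute gradients without hand-derived formulas. TensorFlow (through `tf.GradientTape`) and PyTorch both rely on reverse-mode differentiation. The sketch below uses the simpler forward mode with dual numbers instead. Its only purpose is to show that derivatives can be propagated mechanically through ordinary arithmetic. The `Dual` class and the function `f` are hypothetical and not part of any library.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A number value + deriv·ε with ε² = 0; `deriv` carries the derivative along."""
    value: float
    deriv: float = 0.0

    def __add__(self, other: Dual | float) -> Dual:
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other: Dual | float) -> Dual:
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def sin(x: Dual) -> Dual:
    # Chain rule: (sin u)' = cos(u) · u'
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)


def f(x):
    return 3 * x * x + sin(x)  # analytically, f'(x) = 6x + cos(x)


if __name__ == "__main__":
    out = f(Dual(2.0, 1.0))  # seed dx/dx = 1
    print(out.value, out.deriv)
```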

Advantages of TensorFlow

- Scalability: TensorFlow is designed for large-scale machine learning tasks and can leverage distributed computing resources to train models efficiently on massive datasets.

- Flexibility: TensorFlow allows users to define custom models and algorithms, providing a high degree of flexibility in model development.

- Community Support: As a popular framework, TensorFlow benefits from a large and active community, offering extensive documentation, tutorials, and support forums.

- Industry Adoption: TensorFlow is widely used in industry, making it a valuable skill for professionals in the field of machine learning.

Applications of TensorFlow

- Image Recognition: CNNs built using TensorFlow are used for tasks like object detection, image classification, and facial recognition.

- Natural Language Processing: RNNs and transformer models built with TensorFlow are used for tasks like machine translation, text summarization, and sentiment analysis.

- Speech Recognition: TensorFlow is used to build speech recognition systems that can transcribe spoken language into text.

- Drug Discovery: TensorFlow is used in drug discovery research to analyze molecular structures and predict the effectiveness of potential drug candidates.

PyTorch: A Dynamic and Flexible Deep Learning Framework

PyTorch is another popular open-source deep learning framework, known for its dynamic computational graph, ease of use, and strong support for research. It provides a Python-centric interface, making it easy to integrate with other Python libraries and tools.

Key Functionalities

- Dynamic Computational Graph: PyTorch’s dynamic computational graph allows for flexible model definition and modification during runtime, making it suitable for research and experimentation.

- Tensor Operations: Similar to TensorFlow, PyTorch uses tensors for efficient numerical computations and provides a wide range of operations for tensor manipulation.

- Automatic Differentiation: PyTorch automatically calculates gradients, simplifying the optimization process for neural networks.

- Modular Design: PyTorch’s modular design allows users to easily combine different components and create custom models.
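A dynamic ("define-by-run") graph can be illustrated with a tiny reverse-mode autodiff in plain Python. The graph is recorded as operations execute, so ordinary `if` statements decide its shape on each call. This is a hypothetical teaching sketch, loosely in the spirit of PyTorch's autograd, and not its actual implementation. In PyTorch itself you create tensors with `requires_grad=True` and call `.backward()` on the result.

```python
class Value:
    """A scalar that records the operations producing it, building the graph at runtime."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # Values this one was computed from
        self._grad_fns = grad_fns  # maps upstream gradient to each parent's contribution

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Topologically order the recorded graph, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, fn in zip(v._parents, v._grad_fns):
                parent.grad += fn(v.grad)


def model(x, w):
    # Data-dependent control flow: the recorded graph differs depending on x.
    if x.data > 0:
        return x * w * w
    return x + w


if __name__ == "__main__":
    x, w = Value(3.0), Value(2.0)
    y = model(x, w)
    y.backward()
    print(y.data, x.grad, w.grad)
```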

Advantages of PyTorch

- Ease of Use: PyTorch’s Pythonic interface and intuitive API make it relatively easy to learn and use, even for beginners.

- Flexibility: PyTorch’s dynamic computational graph allows for greater flexibility in model design and experimentation.

- Research Focus: PyTorch is widely used in research, making it a valuable tool for exploring new ideas and developing cutting-edge models.

- Community Support: PyTorch benefits from a large and active community, offering extensive documentation, tutorials, and support forums.

Applications of PyTorch

- Computer Vision: PyTorch is used for building various computer vision applications, including object detection, image classification, and video analysis.

- Natural Language Processing: PyTorch is used for tasks like machine translation, text summarization, and sentiment analysis.

- Robotics: PyTorch is used in robotics applications for tasks like motion planning, object manipulation, and autonomous navigation.

- Reinforcement Learning: PyTorch is used to build reinforcement learning agents that can learn from experience and make optimal decisions.

Comparison of Machine Learning Libraries

| Library Name | Key Features | Applications | Advantages |
|---|---|---|---|
| Scikit-learn | Classification, regression, clustering, dimensionality reduction, model selection and evaluation | Spam filtering, image recognition, customer segmentation, fraud detection | Ease of use, comprehensive algorithms, efficiency, community support |
| TensorFlow | Deep learning, tensor operations, automatic differentiation, deployment tools | Image recognition, natural language processing, speech recognition, drug discovery | Scalability, flexibility, community support, industry adoption |
| PyTorch | Dynamic computational graph, tensor operations, automatic differentiation, modular design | Computer vision, natural language processing, robotics, reinforcement learning | Ease of use, flexibility, research focus, community support |

10. The Importance of Ethics in Machine Learning

Machine learning, a powerful tool for driving innovation and solving complex problems, also carries significant ethical implications. As algorithms become increasingly sophisticated and integrated into various aspects of our lives, it’s crucial to examine the ethical questions raised by their development and deployment.

Ethical Considerations in Machine Learning Development

The development and deployment of machine learning models raise critical ethical considerations. These models are often used to make decisions that impact individuals and society, making it imperative to ensure they are fair, unbiased, and transparent.

- Real-world Scenarios: Consider the use of machine learning in loan applications. A biased algorithm could unfairly deny loans to individuals based on factors like race or gender, perpetuating existing inequalities. In healthcare, biased algorithms could lead to misdiagnosis or inappropriate treatment, impacting patient outcomes.

- Consequences of Biased Algorithms: The potential consequences of biased or unfair algorithms are far-reaching. They can exacerbate existing social inequalities, erode trust in technology, and lead to discriminatory outcomes. For instance, biased algorithms in criminal justice systems could contribute to racial disparities in sentencing.

- Data Privacy and Security: Data privacy and security are fundamental ethical considerations in machine learning. The data used to train models must be collected, stored, and used responsibly. Violations of privacy or breaches of security can have severe consequences, impacting individuals and organizations.

Identifying Potential Biases and Fairness Issues

Machine learning algorithms are susceptible to biases introduced during data collection, feature engineering, and model training.

- Data Collection Biases: The data used to train machine learning models often reflects existing societal biases. For example, if a dataset used to train a hiring algorithm is skewed towards a particular demographic, the algorithm may perpetuate those biases, leading to unfair hiring practices.

- Feature Engineering Biases: The selection and engineering of features can also introduce biases. For instance, using proxies like zip code or credit score in a loan application algorithm could perpetuate existing socioeconomic disparities.

- Model Training Biases: Model training techniques can also contribute to bias. If a model is trained on data that is not representative of the population it will be used on, it may produce biased predictions.

- Impact of Biased Algorithms: Biased algorithms can have a significant impact on individuals and communities. For instance, a biased facial recognition system could lead to wrongful arrests or misidentifications, disproportionately affecting marginalized communities.

- Ethical Implications in Decision-Making: The ethical implications of algorithmic decision-making are particularly relevant in areas like loan approvals, hiring, and criminal justice. Biased algorithms can perpetuate discrimination and exacerbate existing inequalities.

Building Ethical and Responsible Machine Learning Systems

Building ethical and responsible machine learning systems requires a comprehensive approach that addresses potential biases and ensures fairness and transparency.

- Framework for Ethical Machine Learning: A framework for ethical machine learning development should include principles, guidelines, and methodologies to ensure responsible AI development. These frameworks should address data privacy, fairness, transparency, accountability, and human oversight.

- Data Diversity and Fairness Metrics: Data diversity is crucial for mitigating bias in machine learning. Ensuring that the data used to train models is representative of the population it will be used on can help reduce bias. Fairness metrics, such as accuracy parity and equalized odds, can be used to assess the fairness of machine learning models.

- Explainability and Transparency: Explainability refers to the ability to understand how a machine learning model makes predictions. Transparency involves providing clear and accessible information about the data used, the algorithms employed, and the potential biases in the model.

- Implementing Ethical Machine Learning Practices: Companies and organizations can implement ethical machine learning practices by establishing clear ethical guidelines, conducting bias audits, and involving stakeholders in the development and deployment of AI systems.

- Human Oversight and Accountability: Human oversight and accountability are essential for responsible AI development. Humans should be involved in the development, deployment, and monitoring of machine learning systems to ensure ethical considerations are addressed.
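Both fairness metrics named in this list can be computed directly from a model's predictions. Accuracy parity compares accuracy across groups. Equalized odds compares true-positive and false-positive rates across groups. This is a minimal sketch that assumes binary labels and predictions and a hypothetical `groups` list; the numbers are invented.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group accuracy, true-positive rate (TPR), and false-positive rate (FPR)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        # "t"/"f" = prediction correct or not; "p"/"n" = predicted positive or negative.
        key = ("t" if t == p else "f") + ("p" if p == 1 else "n")
        counts[g][key] += 1
    rates = {}
    for g, c in counts.items():
        total = sum(c.values())
        positives, negatives = c["tp"] + c["fn"], c["tn"] + c["fp"]
        rates[g] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            "tpr": c["tp"] / positives if positives else float("nan"),
            "fpr": c["fp"] / negatives if negatives else float("nan"),
        }
    return rates


def equalized_odds_gap(rates):
    """Largest between-group difference in TPR or FPR (0 means equalized odds holds)."""
    tprs = [r["tpr"] for r in rates.values()]
    fprs = [r["fpr"] for r in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    rates = group_rates(y_true, y_pred, groups)
    print(rates)
    print(equalized_odds_gap(rates))
```

In this invented example both groups have accuracy 0.75, so accuracy parity holds. The equalized-odds gap, however, is 0.5: the model catches every positive case in group "a" but only half of those in group "b". This is one reason a single fairness metric is often not enough on its own.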

11. Machine Learning for Business

Machine learning is no longer just a futuristic concept; it’s a powerful tool transforming businesses across industries. By leveraging the ability of computers to learn from data, organizations can gain valuable insights, optimize operations, and unlock new opportunities for growth.

Benefits of Machine Learning for Businesses

Machine learning offers a wide range of benefits for businesses, enabling them to make better decisions, improve efficiency, and enhance customer experiences.

- Customer Segmentation: Machine learning algorithms can analyze customer data to identify distinct groups with similar characteristics, allowing businesses to tailor marketing campaigns and product offerings to specific segments. This personalized approach can lead to higher conversion rates and increased customer satisfaction.

- Fraud Detection: Machine learning models can detect fraudulent activities in real-time by analyzing patterns in transaction data and identifying anomalies. This helps businesses prevent financial losses and protect their customers from scams.

- Predictive Analytics: Machine learning algorithms can predict future outcomes based on historical data, allowing businesses to anticipate trends, make informed decisions, and optimize resource allocation. For example, predicting customer churn can help businesses proactively retain valuable customers.
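Churn prediction is usually framed as binary classification. This is a minimal sketch under stated assumptions. Each customer has two hypothetical features: months since last purchase and number of support tickets. The model is logistic regression trained by batch gradient descent on invented data. A real project would use a library implementation and many more features.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(X, y, lr=0.05, epochs=5000):
    """Fit weights w and bias b by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss with respect to the logit is (prediction - label).
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += error * xi[j] / n
            grad_b += error / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def churn_probability(x, w, b) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


if __name__ == "__main__":
    # Hypothetical customers: [months since last purchase, support tickets filed]
    X = [[1, 0], [2, 1], [1, 1], [8, 3], [9, 4], [7, 2]]
    y = [0, 0, 0, 1, 1, 1]  # 1 = churned
    w, b = train_logistic_regression(X, y)
    print(round(churn_probability([6, 2], w, b), 3))
```

A probability output, rather than a hard yes/no, lets a business rank customers by churn risk. It can then focus retention efforts where they matter most.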

Examples of Machine Learning in Business

Numerous companies have successfully implemented machine learning solutions to address various business challenges and drive innovation.

- Netflix uses machine learning to recommend movies and TV shows to its subscribers, based on their viewing history and preferences. This personalized recommendation system has significantly increased user engagement and satisfaction.

- Amazon leverages machine learning for product recommendations, targeted advertising, and supply chain optimization. The company’s recommendation engine, powered by machine learning, is a key driver of its online sales growth.

- Uber uses machine learning to optimize ride pricing, predict demand, and manage its driver network. This data-driven approach has enabled Uber to provide efficient and affordable transportation services to millions of users.
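Recommendation systems like these are often explained through collaborative filtering: recommend items that were liked by users whose past behavior resembles yours. This is a minimal user-based sketch that measures similarity with cosine similarity. The ratings table is hypothetical. The sketch illustrates the general technique and is not how Netflix or Amazon actually build their systems.

```python
import math

# Hypothetical ratings: user -> {title: rating on a 1-5 scale}
RATINGS = {
    "ana":   {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "ben":   {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Drama D": 5},
    "chloe": {"Drama A": 1, "Comedy B": 5, "Comedy E": 4},
}


def cosine_similarity(a: dict, b: dict) -> float:
    """Cosine similarity computed over the titles both users have rated."""
    common = a.keys() & b.keys()
    if not common:
        return 0.0
    dot = sum(a[t] * b[t] for t in common)
    norm_a = math.sqrt(sum(a[t] ** 2 for t in common))
    norm_b = math.sqrt(sum(b[t] ** 2 for t in common))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict, top_n: int = 1) -> list[str]:
    """Score titles the user has not rated by similarity-weighted ratings of others."""
    scores: dict[str, float] = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for title, r in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + sim * r
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    print(recommend("ana", RATINGS, top_n=2))  # → ['Drama D', 'Comedy E']
```

Ana's ratings closely match Ben's, so Ben's favorite that Ana has not seen ranks first. Practical systems typically refine this by mean-centering each user's ratings and by using far larger, sparser data.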

12. The Future of Machine Learning

Machine learning (ML) is rapidly evolving, transforming various industries and aspects of our lives. From healthcare to finance, manufacturing to entertainment, ML’s impact is undeniable and continues to expand. As we move forward, it’s crucial to understand the potential implications of this technology on various sectors, society, and the future of work.

This chapter explores the future of machine learning, analyzing its potential impact on industries, examining emerging trends, and addressing the challenges and opportunities it presents.

Industry Impact

Machine learning is poised to revolutionize numerous industries, transforming how we work, live, and interact with the world around us. By analyzing vast amounts of data and identifying patterns, ML can automate tasks, optimize processes, and generate insights that were previously impossible.

Let’s examine the potential impact of machine learning on three specific industries: healthcare, finance, and manufacturing.

Healthcare

Machine learning is transforming healthcare by improving diagnosis, treatment, and drug discovery.

| Industry | Impact | Benefits | Risks | Ethical Considerations |
|---|---|---|---|---|
| Healthcare | Personalized medicine, early disease detection, drug discovery, and automated medical tasks | Improved patient outcomes, reduced healthcare costs, and accelerated medical research | Data privacy concerns, algorithmic bias, and potential job displacement | Ensuring equitable access to AI-powered healthcare, addressing bias in algorithms, and protecting patient privacy |

For instance, ML algorithms can analyze medical images to detect cancer at earlier stages, leading to more effective treatments and better patient outcomes. Similarly, ML can assist in drug discovery by identifying potential drug candidates and predicting their effectiveness, accelerating the process of bringing new therapies to market.

The use of ML in healthcare has the potential to significantly improve patient care and reduce healthcare costs. However, it’s essential to address the risks and ethical considerations associated with this technology, such as data privacy concerns, algorithmic bias, and the potential for job displacement.

Finance

Machine learning is revolutionizing the financial industry by automating tasks, improving fraud detection, and personalizing financial services.

| Industry | Impact | Benefits | Risks | Ethical Considerations |
|---|---|---|---|---|
| Finance | Automated trading, fraud detection, risk assessment, and personalized financial advice | Increased efficiency, reduced risk, and improved customer experiences | Algorithmic bias, financial instability, and potential for market manipulation | Ensuring fair and transparent financial practices, mitigating algorithmic bias, and protecting customer data |

ML algorithms can analyze vast amounts of financial data to identify patterns and predict market trends, enabling automated trading and risk assessment. These algorithms can also detect fraudulent transactions in real time, protecting financial institutions and customers from losses. However, the use of ML in finance also presents risks, such as algorithmic bias, which can lead to unfair or discriminatory outcomes.

Additionally, the potential for market manipulation and financial instability must be carefully considered.

Manufacturing

Machine learning is transforming manufacturing by improving efficiency, quality control, and predictive maintenance.

| Industry | Impact | Benefits | Risks | Ethical Considerations |
|---|---|---|---|---|
| Manufacturing | Predictive maintenance, quality control, process optimization, and automated production | Increased efficiency, reduced downtime, and improved product quality | Job displacement, potential for safety hazards, and reliance on complex algorithms | Ensuring safe and ethical use of ML in manufacturing, addressing potential job displacement, and promoting transparency in decision-making |

ML algorithms can analyze sensor data from machines to predict potential failures, enabling preventative maintenance and reducing downtime. Similarly, ML can be used to identify defects in products during production, ensuring high-quality products and reducing waste. The use of ML in manufacturing has the potential to significantly improve efficiency, productivity, and product quality.

However, it’s essential to address the risks associated with this technology, such as potential job displacement and the need for robust safety protocols.

The transformative impact of machine learning on these industries is undeniable. It has the potential to improve efficiency, reduce costs, and create new products and services. However, it’s crucial to acknowledge the risks and ethical considerations associated with this technology and to ensure its responsible development and deployment.

Societal Impact

The potential societal impact of machine learning is vast and multifaceted. As ML permeates various aspects of our lives, it raises crucial questions about employment, privacy, and social equity.

One of the most significant concerns is the potential impact of ML on employment. As algorithms become more sophisticated, they can automate tasks previously performed by humans, potentially leading to job displacement. However, ML also creates new opportunities in fields such as data science, AI development, and ML engineering.

The key to mitigating job displacement is to focus on reskilling and upskilling the workforce, equipping individuals with the skills needed to thrive in the AI-powered economy.

Another critical concern is the potential impact of ML on privacy. ML algorithms rely on vast amounts of data, which can include sensitive personal information. It’s crucial to ensure the responsible use of this data to protect individual privacy. This includes implementing strong data security measures, obtaining informed consent from individuals, and establishing clear guidelines for data collection and usage.

Finally, the potential for bias and discrimination in ML algorithms raises concerns about social equity. If training data is biased, the resulting algorithms can perpetuate existing inequalities. It’s crucial to develop and deploy ML algorithms that are fair, unbiased, and transparent.

This includes addressing bias in training data, using diverse datasets, and implementing mechanisms to monitor and mitigate algorithmic bias.

Machine Learning in Healthcare

Machine learning is revolutionizing healthcare by improving diagnoses, treatment plans, and drug discovery. It analyzes massive datasets to identify patterns and trends, enabling more precise and personalized care.

Applications of Machine Learning in Healthcare

Machine learning algorithms are being used in a wide range of healthcare applications, including:

- Disease Prediction: Machine learning models can analyze patient data, such as medical history, genetic information, and lifestyle factors, to predict the likelihood of developing certain diseases. This allows for early intervention and preventive measures.

- Diagnosis: Machine learning algorithms can assist in diagnosing diseases by analyzing medical images, such as X-rays, MRIs, and CT scans. These algorithms can identify subtle patterns that may be missed by human eyes, leading to more accurate and timely diagnoses.

- Treatment Planning: Machine learning models can help doctors personalize treatment plans based on a patient’s individual characteristics and disease stage. This can lead to more effective and targeted treatments, reducing the risk of side effects and improving patient outcomes.

- Drug Discovery: Machine learning is being used to accelerate drug discovery by identifying promising drug candidates and optimizing drug development processes. These algorithms can analyze large datasets of chemical compounds and biological data to predict drug efficacy and safety.

- Personalized Medicine: Machine learning enables the development of personalized medicine, where treatment plans are tailored to each patient’s unique genetic makeup and other factors. This approach can improve treatment effectiveness and reduce the risk of adverse reactions.

Ethical Considerations in Machine Learning for Healthcare

While machine learning offers significant potential for improving healthcare, it also raises ethical concerns:

- Patient Privacy and Data Security: Healthcare data is highly sensitive, and it is crucial to ensure that patient privacy is protected when using machine learning algorithms. Robust data security measures are essential to prevent unauthorized access and data breaches.

- Algorithmic Bias: Machine learning algorithms can perpetuate existing biases in healthcare data, leading to unfair or discriminatory outcomes. It is essential to develop algorithms that are fair and unbiased, and to monitor their performance for potential bias.

- Transparency and Explainability: Machine learning models can be complex and difficult to interpret. It is important to develop transparent and explainable algorithms, so that healthcare providers can understand how the models are making decisions and ensure they are clinically sound.

- Access and Equity: The benefits of machine learning in healthcare should be accessible to all patients, regardless of their socioeconomic status or location. It is important to address disparities in access to technology and data, and to ensure that machine learning solutions are developed with equity in mind.
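One concrete way to monitor a model’s performance for potential bias is to compare its error rates across patient groups. The sketch below is a minimal illustration built on made-up toy labels and hypothetical group names "A" and "B". It computes the true-positive rate (sensitivity) for each group and the largest gap between groups. A large gap suggests the model misses the condition more often in some populations than in others.

```python
from collections import defaultdict

def true_positive_rates(y_true, y_pred, groups):
    """Return {group: sensitivity}, computed over the actual positive cases in each group."""
    positives = defaultdict(int)  # actual positive cases per group
    caught = defaultdict(int)     # positives the model correctly flagged
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}

def max_tpr_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: 1 = condition present (y_true) / predicted present (y_pred)
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = true_positive_rates(y_true, y_pred, groups)
print(rates)               # {'A': 0.75, 'B': 0.5}
print(max_tpr_gap(rates))  # 0.25
```

Sensitivity is only one possible fairness metric. Which metric matters most depends on the clinical context.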

Examples of Successful Machine Learning Applications in Healthcare

- IBM Watson for Oncology: This system uses machine learning to analyze patient data and provide personalized cancer treatment recommendations. It was designed to improve treatment outcomes and shorten the time needed to develop a treatment plan, although independent evaluations of its clinical benefit were mixed.

- Google’s AI for Diabetic Retinopathy: This system uses deep learning to analyze retinal images and detect diabetic retinopathy, a leading cause of blindness. In published evaluations, it identified the condition with accuracy comparable to that of eye specialists.

- Cardiogram’s Heart Health Monitoring: This app uses machine learning to analyze heart rate data from wearable devices to detect potential heart problems. It has been shown to be effective in identifying irregular heart rhythms and other cardiovascular issues.

Machine Learning in Finance

Machine learning is rapidly transforming the financial industry, bringing new possibilities and efficiencies to traditional processes. From fraud detection and risk assessment to investment management and customer service, machine learning is proving to be a powerful tool for financial institutions.

Fraud Detection

Machine learning algorithms are widely used to identify fraudulent transactions. By analyzing large volumes of data, such as transaction history and customer behavior, these algorithms can detect patterns and anomalies that may indicate fraud. This allows financial institutions to flag or block suspicious activity early and minimize losses.
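A very simple version of the anomaly-detection idea compares each new transaction with the customer’s own history. The sketch below flags a transaction when its amount lies far from the customer’s historical mean, measured in standard deviations (a z-score). This is a statistical baseline rather than a trained model. The threshold of 3 and the sample amounts are assumptions. Production fraud systems learn from many more signals than the amount alone.

```python
import statistics

def flag_anomalies(history, new_amounts, threshold=3.0):
    """Return the new amounts whose z-score against `history` exceeds `threshold`.

    `history` needs at least two values, because the sample standard deviation
    is undefined for fewer.
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    flagged = []
    for amount in new_amounts:
        if stdev == 0:
            # The history has no spread, so any deviation from it stands out.
            is_anomaly = amount != mean
        else:
            is_anomaly = abs(amount - mean) / stdev > threshold
        if is_anomaly:
            flagged.append(amount)
    return flagged

# Hypothetical purchase amounts for one customer
past = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0, 41.0]
incoming = [43.0, 950.0, 37.0]
print(flag_anomalies(past, incoming))  # [950.0]
```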

Risk Assessment

Machine learning can also be used to assess risk in various financial contexts. For example, it can be used to evaluate the creditworthiness of borrowers, predict the likelihood of loan defaults, and assess the risk of investments. By analyzing historical data and market trends, machine learning models can often provide more accurate and nuanced risk assessments than traditional methods.
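To make "predicting the likelihood of default" concrete, here is a minimal logistic-regression sketch trained with batch gradient descent in plain Python. Logistic regression turns borrower attributes into a default probability between 0 and 1. The two features (debt-to-income ratio and number of late payments), the six-borrower dataset, the learning rate, and the epoch count are all hypothetical. They were chosen only to illustrate the mechanics.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(X, y, lr=0.1, epochs=5000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log-loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def predict_proba(w, b, x):
    """Estimated probability of default for one borrower."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical borrowers: [debt-to-income ratio, late payments in the last year]
X = [[0.1, 0], [0.2, 0], [0.3, 1], [0.6, 3], [0.7, 4], [0.8, 2]]
y = [0, 0, 0, 1, 1, 1]  # 1 = defaulted

w, b = train_logistic(X, y)
print(round(predict_proba(w, b, [0.15, 0]), 3))  # low-risk applicant
print(round(predict_proba(w, b, [0.75, 3]), 3))  # high-risk applicant
```

In practice, a library implementation with regularization and a properly validated dataset would replace this hand-rolled loop.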

Investment Management

Machine learning is changing investment management by enabling more sophisticated, data-driven approaches. Algorithmic trading systems, increasingly driven by machine learning, can execute trades faster and more efficiently based on real-time analysis of market data. Machine learning can also help identify investment opportunities, optimize portfolio allocation, and manage risk.

Challenges and Opportunities

While machine learning offers significant advantages in finance, there are also challenges and opportunities to consider.

Regulatory Compliance and Data Security

Financial institutions face strict regulations regarding data privacy and security. Machine learning models often rely on large datasets, which raises concerns about data security and compliance with regulations like GDPR. Ensuring that machine learning applications adhere to these regulations is crucial for maintaining trust and avoiding legal issues.

Transparency and Explainability

Machine learning models can be complex and difficult to understand. This lack of transparency can be a challenge, especially when making critical financial decisions. Efforts are underway to develop more transparent and explainable machine learning models, but this remains an ongoing area of research.
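Simple models are easier to explain partly because their predictions decompose cleanly. In a linear scoring model, each feature’s contribution is its weight multiplied by its value. A reviewer can therefore see exactly what pushed a score up or down. The weights, bias, and feature names below are hypothetical. For complex models, attribution methods such as SHAP or LIME are commonly used to approximate a similar breakdown.

```python
def explain_linear_score(weights, bias, features):
    """Break a linear score into per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical credit-risk model: positive contributions raise the risk score
weights = {"debt_to_income": 2.0, "late_payments": 0.5, "years_employed": -0.25}
applicant = {"debt_to_income": 0.5, "late_payments": 3, "years_employed": 4}

score, ranked = explain_linear_score(weights, -1.0, applicant)
print(score)  # 0.5
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```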

Data Quality and Bias

The performance of machine learning models depends heavily on the quality and completeness of the data used for training. Biased or incomplete data can lead to biased predictions and unfair outcomes. It’s essential to ensure that the data used for training machine learning models in finance is accurate, representative, and unbiased.

Examples of Successful Machine Learning Applications in Finance

- Algorithmic Trading: Hedge funds and investment banks increasingly use algorithmic trading systems powered by machine learning to execute trades automatically based on real-time market data. This enables faster, more efficient execution, potentially leading to higher returns.

- Credit Scoring: Lenders use machine learning to assess the creditworthiness of borrowers and determine loan eligibility. By analyzing data such as credit history, income, and spending patterns, machine learning models can produce more personalized and potentially more accurate credit scores.

- Fraud Detection: Financial institutions use machine learning to detect fraudulent transactions in real time. By analyzing patterns in transaction data, these algorithms can identify suspicious activity and flag it for further investigation.

Machine Learning in Education

Machine learning is revolutionizing various fields, and education is no exception. Its ability to analyze vast amounts of data and identify patterns opens up exciting possibilities for personalized learning, improved student outcomes, and efficient educational practices.

Personalization & Outcomes

Machine learning algorithms can analyze student data, such as learning styles, performance history, and engagement patterns, to tailor educational content and pace to individual needs. This personalized approach has the potential to significantly impact student motivation, engagement, and academic achievement.

- Tailored Learning Experiences: By analyzing student data, machine learning algorithms can identify individual strengths and weaknesses, allowing educators to create customized learning paths and provide targeted support. For example, a student struggling with algebra might receive additional practice problems or be directed to learning resources suited to their needs.

- Increased Student Motivation and Engagement: Students who receive personalized learning experiences may be more likely to feel appropriately challenged and engaged. This can increase their motivation and give them a greater sense of ownership over their learning.

- Improved Academic Achievement: Research suggests that personalized learning can improve student performance. For example, a study funded by the Bill & Melinda Gates Foundation reported achievement gains for students in personalized learning programs, although the size of the gains varied.

- Predictive Analytics: Machine learning can be used to predict student performance and identify at-risk learners. This allows educators to intervene early and provide targeted support to students who may be struggling. For example, a machine learning model could identify students who are likely to drop out of school based on factors such as attendance, grades, and engagement.
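As a rough illustration of flagging at-risk learners, the sketch below uses k-nearest neighbors. A new student receives the majority outcome of the k most similar past students, with similarity measured on attendance rate and average grade. Both features are scaled to the 0–1 range so that neither dominates the distance. The features, the toy records, and k = 3 are hypothetical. A real early-warning system would need far more data and careful validation.

```python
import math
from collections import Counter

def knn_predict(records, query, k=3):
    """Majority label among the k records closest to `query` (Euclidean distance)."""
    by_distance = sorted(records, key=lambda r: math.dist(r[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical past students: ((attendance rate, average grade as a fraction), outcome)
history = [
    ((0.95, 0.85), "on_track"),
    ((0.90, 0.78), "on_track"),
    ((0.88, 0.90), "on_track"),
    ((0.60, 0.55), "dropped_out"),
    ((0.55, 0.40), "dropped_out"),
    ((0.70, 0.50), "dropped_out"),
]

print(knn_predict(history, (0.62, 0.48)))  # dropped_out
print(knn_predict(history, (0.92, 0.80)))  # on_track
```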

Addressing Challenges

Machine learning can be a powerful tool for addressing various challenges in education.

- Automating Tasks: Machine learning can automate tasks such as grading, giving feedback, and generating personalized learning plans, freeing up teacher time for one-on-one instruction and interaction with students. For example, machine learning algorithms can be used to score essays and other open-ended answers, giving students instant feedback.

- Engaging Learning Experiences: Machine learning can be used to develop engaging, interactive learning experiences that address diverse learning styles and needs. For example, machine learning algorithms can personalize simulations, games, and interactive exercises to make learning more enjoyable and effective.

- Ethical Considerations: It’s crucial to address the ethical issues surrounding the use of machine learning in education. Data privacy, algorithmic bias, and the potential for technology to exacerbate existing inequalities are critical concerns. For example, it’s important to ensure that machine learning algorithms are not biased against certain groups of students based on factors such as race, gender, or socioeconomic status.

Successful Applications

Machine learning is already being used in various successful applications in education.

- Adaptive Learning Platforms: Platforms like Khan Academy, Duolingo, and Coursera use machine learning to adjust the difficulty of lessons based on student performance. For example, if a student consistently answers questions correctly, the platform will present more challenging material. If a student struggles, the platform will provide additional support and resources.

- Automated Grading Systems: Machine learning is being used to automate the grading of essays, code, and other assignments, freeing up teacher time for more personalized instruction and feedback. For example, machine learning algorithms can be used to evaluate the quality of student writing, identify plagiarism, and provide feedback on code assignments.

- Intelligent Tutoring Systems: Systems like Carnegie Learning and ALEKS use machine learning to provide individualized tutoring and feedback to students. These systems can identify students’ learning gaps and provide targeted instruction and practice exercises.
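The adaptive behavior described above (harder material after consistent success, more support after repeated struggles) can be sketched as a simple rule-based "staircase." This is a hypothetical illustration of the idea only, not how Khan Academy, Duolingo, or Coursera actually work. Real platforms may estimate a learner’s skill with learned models instead of a fixed rule. The function name, the 1–10 level range, and the three-answer window are all assumptions.

```python
def next_difficulty(level, recent_results, min_level=1, max_level=10, window=3):
    """Hypothetical staircase rule: raise the level after `window` straight correct
    answers, lower it after `window` straight misses, and otherwise keep it."""
    last = recent_results[-window:]
    if len(last) < window:
        return level  # not enough evidence yet
    if all(last):
        return min(level + 1, max_level)
    if not any(last):
        return max(level - 1, min_level)
    return level

print(next_difficulty(4, [True, True, True]))     # 5
print(next_difficulty(4, [False, False, False]))  # 3
print(next_difficulty(4, [True, False, True]))    # 4
print(next_difficulty(10, [True, True, True]))    # 10 (capped at max_level)
```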

Key Questions Answered

What are the main types of machine learning?

The three main types of machine learning are supervised learning, unsupervised learning, and reinforcement learning. Supervised learning involves training a model on labeled data, while unsupervised learning deals with unlabeled data. Reinforcement learning focuses on training agents to make decisions in an environment by rewarding desired actions.
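The reward-driven idea behind reinforcement learning can be seen in one of its simplest settings, the multi-armed bandit. An agent repeatedly picks one of several actions, receives a reward, and gradually favors the action with the best average reward. The sketch below uses epsilon-greedy exploration: most of the time the agent exploits its best estimate, and occasionally it tries a random action. The three reward probabilities, the step count, epsilon = 0.1, and the seed are arbitrary assumptions.

```python
import random

def run_epsilon_greedy(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best by trial and error (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions    # how often each action was tried
    values = [0.0] * n_actions  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: pick a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # incremental mean
    return values, counts

values, counts = run_epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(counts)), key=lambda a: counts[a])
print(best, [round(v, 2) for v in values])
```

Full reinforcement learning adds states and delayed rewards. The core loop of acting, observing a reward, and updating estimates is the same.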

What are some real-world applications of machine learning?

Machine learning is used in a wide range of applications, including image recognition, natural language processing, fraud detection, medical diagnosis, and self-driving cars.

What are some challenges in machine learning?

Some of the challenges in machine learning include data bias, model interpretability, data privacy, and scalability. Addressing these challenges is crucial for ensuring the responsible and ethical development and deployment of machine learning systems.